Planet Python

Last update: April 01, 2025 01:43 PM UTC

April 01, 2025

Zero to Mastery

[March 2025] Python Monthly Newsletter 🐍

64th issue of Andrei Neagoie's must-read monthly Python Newsletter: Django Got Forked, The Science of Troubleshooting, Python 3.13 TLDR, and much more. Read the full newsletter to get up-to-date with everything you need to know from last month.

Tryton News

Newsletter April 2025

Last month we focused on fixing bugs, improving the behaviour of things, speeding-up performance issues - building on the changes from our last release. We also added some new features which we would like to introduce to you in this newsletter.

For an in depth overview of the Tryton issues please take a look at our issue tracker or see the issues and merge requests filtered by label.

Changes for the User

CRM, Sales, Purchases and Projects

Now we notify the user when trying to add a duplicate contact mechanism.

Add quotation validity date on sale and purchase quotations.

On sale we compute the validity date when it goes to state quotation and display the validity date in the report. On purchase we set the date directly.

It is a common practice among other things to answer a complain by giving a promotion coupon to the customer. Now the user can create a coupon from the sale complain as an action.

We now use the actual quantity of a sale line when executing a sale complaint,

when the product is already selected.

Now we add an relate to open all products of sales, to be able to check all the sold products (for quantity or price).

We simplify the coupon number management and added a menu entry for promotion coupon numbers.

Now we display a coupon form on promotions and we remove the name field on promotion coupons.

Accounting, Invoicing and Payments

Now we allow to download all pending SEPA messages in a single message report.

We now replace the maturity date on account move by a combined payable/receivable date field which contains a given maturity date and if it is empty, falls back to the effective date. This provides a better chronology of the move lines.

On account move we now replace the post_number by the number-field. The original functionality of the number field, - delivering a sequential number for account moves in draft state, - is replaced by the account move id.

We now add some common payment terms:

- Upon Receipt

- Net 10, 15, 30, 60 days

- Net 30, 60 days End of Month

- End of Month

- End of Month Following

Now we display an optional company-field on the payment and group list.

We now add tax identifiers to the company. A company may have two tax identifiers, one used for transactions inland and another used abroad. Now it is possible to select the company tax identifier based on rules.

Now we make the deposit-field optional on party list view.

We now use the statement date to compute the start balance instead of always using the last end balance.

Now we make entries in analytic accounting read-only depending on their origin state.

We now allow to delete landed costs only if they are cancelled.

Now we add the company field optionally to the SEPA mandate list.

Stock, Production and Shipments

We now add the concept of product place also to the inventory line, because some users may want to see the place when doing inventory so they know where to count the products exactly.

Now we display the available quantity when searching in a stock move

and if the product is already selected:

We now ship packages of internal shipments with transit.

Now we do no longer force to fill an incoterm when shipping inside Europe.

User Interface

In the web client now we scroll to the first selected element in the tree view, when switching from form view.

Now we add a color widget to the form view.

Also we now add an icon of type

color, to display the color visually in a tree view. We extend the image type to add color which just displays an image filled with color.

Now we deactivate the open-button of the One2Many widget, if there is no form view.

In the desktop client we now include the version number on the new version available message.

System Data and Configuration

In the web user form we now use the same structure as in user form.

Now we make the product attribute names unique. Because the name of the attributes are used as keys of a fields.Dict.

We now add the Yapese currency Rai.

Now we order the incoming documents by their descending ID, with the most recent documents on top.

New Documentation

Now we add an example of a payment term with multiple deltas.

We now reworked the web_shop_shopify module documentation.

New Releases

We released bug fixes for the currently maintained long term support series

7.0 and 6.0, and for the penultimate series 7.4 and 7.2.

Changes for Implementers and Developers

Now we raise UserErrors from the database exceptions, to log more information on data and integrity errors.

In the desktop client we now remove the usage of GenericTreeModel, the last remaining part of pygtkcompat in Tryton.

We now make it easy to extend the Sendcloud sender address with a pattern.

Now we set a default value for all fields of a wizard state view.

If the client does not display a field of a state view, the value of this field on the instance record is not a defined attribute. So we need to access it using getattr with a default value, but in theory this can happen for any state in any record as user can extend any view.

We now store the last version series to which the database was updated in ir.configuration. With this information, the list of databases is filtered to the same client series. The remote access to a database is restricted to databases available in the list. We now also return the series instead of the version for remote call.

Authors: @dave @pokoli @udono spoiler

1 post - 1 participant

Wingware

Wing Python IDE 11 Early Access - March 27, 2025

Wing 11 is now available as an early access release, with improved AI assisted development, support for the uv package manager, improved Python code analysis, improved custom key binding assignment user interface, improved diff/merge, a new preference to auto-save files when Wing loses the application focus, updated German, French and Russian localizations (partly using AI), a new experimental AI-driven Spanish localization, and other bug fixes and minor improvements.

You can participate in the early access program simply by downloading the early access releases. We ask only that you keep your feedback and bug reports private by submitting them through Wing's Help menu or by emailing us at support@wingware.com.

Downloads

Wing 10 and earlier versions are not affected by installation of Wing 11 and may be installed and used independently. However, project files for Wing 10 and earlier are converted when opened by Wing 11 and should be saved under a new name, since Wing 11 projects cannot be opened by older versions of Wing.

New in Wing 11

New in Wing 11

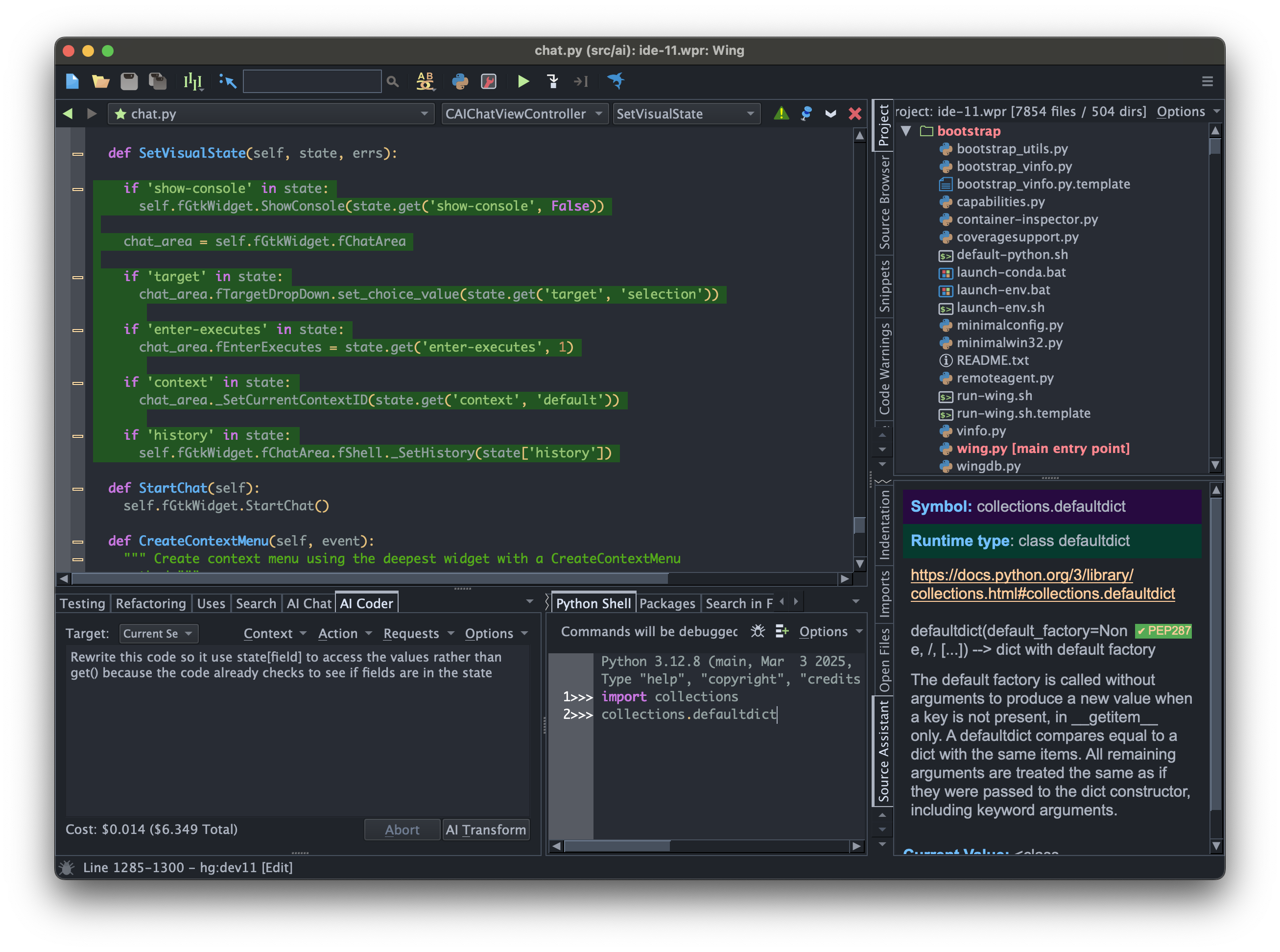

Improved AI Assisted Development

Wing 11 improves the user interface for AI assisted development by introducing two separate tools AI Coder and AI Chat. AI Coder can be used to write, redesign, or extend code in the current editor. AI Chat can be used to ask about code or iterate in creating a design or new code without directly modifying the code in an editor.

This release also improves setting up AI request context, so that both automatically and manually selected and described context items may be paired with an AI request. AI request contexts can now be stored, optionally so they are shared by all projects, and may be used independently with different AI features.

AI requests can now also be stored in the current project or shared with all projects, and Wing comes preconfigured with a set of commonly used requests. In addition to changing code in the current editor, stored requests may create a new untitled file or run instead in AI Chat. Wing 11 also introduces options for changing code within an editor, including replacing code, commenting out code, or starting a diff/merge session to either accept or reject changes.

Wing 11 also supports using AI to generate commit messages based on the changes being committed to a revision control system.

You can now also configure multiple AI providers for easier access to different models. However, as of this release, OpenAI is still the only supported AI provider and you will still need a paid OpenAI account and API key. We recommend paying for Tier 2 or better rate limits.

For details see AI Assisted Development under Wing Manual in Wing 11's Help menu.

Package Management with uv

Wing Pro 11 adds support for the uv package manager in the New Project dialog and the Packages tool.

For details see Project Manager > Creating Projects > Creating Python Environments and Package Manager > Package Management with uv under Wing Manual in Wing 11's Help menu.

Improved Python Code Analysis

Wing 11 improves code analysis of literals such as dicts and sets, parametrized type aliases, typing.Self, type variables on the def or class line that declares them, generic classes with [...], and __all__ in *.pyi files.

Updated Localizations

Wing 11 updates the German, French, and Russian localizations, and introduces a new experimental AI-generated Spanish localization. The Spanish localization and the new AI-generated strings in the French and Russian localizations may be accessed with the new User Interface > Include AI Translated Strings preference.

Improved diff/merge

Wing Pro 11 adds floating buttons directly between the editors to make navigating differences and merging easier, allows undoing previously merged changes, and does a better job managing scratch buffers, scroll locking, and sizing of merged ranges.

For details see Difference and Merge under Wing Manual in Wing 11's Help menu.

Other Minor Features and Improvements

Wing 11 also improves the custom key binding assignment user interface, adds a Files > Auto-Save Files When Wing Loses Focus preference, warns immediately when opening a project with an invalid Python Executable configuration, allows clearing recent menus, expands the set of available special environment variables for project configuration, and makes a number of other bug fixes and usability improvements.

Changes and Incompatibilities

Since Wing 11 replaced the AI tool with AI Coder and AI Chat, and AI configuration is completely different than in Wing 10, so you will need to reconfigure your AI integration manually in Wing 11. This is done with Manage AI Providers in the AI menu or the Options menu in either AI tool. After adding the first provider configuration, Wing will set that provider as the default.

If you have questions about any of this, please don't hesitate to contact us at support@wingware.com.

Glyph Lefkowitz

A Bigger Database

A Database File

When I was 10 years old, and going through a fairly difficult time, I was lucky enough to come into the possession of a piece of software called Claris FileMaker Pro™.

FileMaker allowed its users to construct arbitrary databases, and to associate their tables with a customized visual presentation. FileMaker also had a rudimentary scripting language, which would allow users to imbue these databases with behavior.

As a mentally ill pre-teen, lacking a sense of control over anything or anyone in my own life, including myself, I began building a personalized database to catalogue the various objects and people in my immediate vicinity. If one were inclined to be generous, one might assess this behavior and say I was systematically taxonomizing the objects in my life and recording schematized information about them.

As I saw it at the time, if I collected the information, I could always use it later, to answer questions that I might have. If I didn’t collect it, then what if I needed it? Surely I would regret it! Thus I developed a categorical imperative to spend as much of my time as possible collecting and entering data about everything that I could reasonably arrange into a common schema.

Having thus summoned this specter of regret for all lost data-entry opportunities, it was hard to dismiss. We might label it “Claris’s Basilisk”, for obvious reasons.

Therefore, a less-generous (or more clinically-minded) observer might have replaced the word “systematically” with “obsessively” in the assessment above.

I also began writing what scripts were within my marginal programming abilities at the time, just because I could: things like computing the sum of every street number of every person in my address book. Why was this useful? Wrong question: the right question is “was it possible” to which my answer was “yes”.

If I was obliged to collect all the information which I could observe — in case it later became interesting — I was similarly obliged to write and run every program I could. It might, after all, emit some other interesting information.

I was an avid reader of science fiction as well.

I had this vague sense that computers could kind of think. This resulted in a chain of reasoning that went something like this:

- human brains are kinda like computers,

- the software running in the human brain is very complex,

- I could only write simple computer programs, but,

- when you really think about it, a “complex” program is just a collection of simpler programs

Therefore: if I just kept collecting data, collecting smaller programs that could solve specific problems, and connecting them all together in one big file, eventually the database as a whole would become self-aware and could solve whatever problem I wanted. I just needed to be patient; to “keep grinding” as the kids would put it today.

I still feel like this is an understandable way to think — if you are a highly depressed and anxious 10-year-old in 1990.

Anyway.

35 Years Later

OpenAI is a company that produces transformer architecture machine learning generative AI models; their current generation was trained on about 10 trillion words, obtained in a variety of different ways from a large variety of different, unrelated sources.

A few days ago, on March 26, 2025 at 8:41 AM Pacific Time, Sam Altman took to “X™, The Everything App™,” and described the trajectory of his career of the last decade at OpenAI as, and I quote, a “grind for a decade trying to help make super-intelligence to cure cancer or whatever” (emphasis mine).

I really, really don’t want to become a full-time AI skeptic, and I am not an expert here, but I feel like I can identify a logically flawed premise when I see one.

This is not a system-design strategy. It is a trauma response.

You can’t cure cancer “or whatever”. If you want to build a computer system that does some thing, you actually need to hire experts in that thing, and have them work to both design and validate that the system is fit for the purpose of that thing.

Aside: But... are they, though?

I am not an oncologist; I do not particularly want to be writing about the specifics here, but, if I am going to make a claim like “you can’t cure cancer this way” I need to back it up.

My first argument — and possibly my strongest — is that cancer is not cured.

QED.

But I guess, to Sam’s credit, there is at least one other company partnering with OpenAI to do things that are specifically related to cancer. However, that company is still in a self-described “initial phase” and it’s not entirely clear that it is going to work out very well.

Almost everything I can find about it online was from a PR push in the middle of last year, so it all reads like a press release. I can’t easily find any independently-verified information.

A lot of AI hype is like this. A promising demo is delivered; claims are made that surely if the technology can solve this small part of the problem now, within 5 years surely it will be able to solve everything else as well!

But even the light-on-content puff-pieces tend to hedge quite a lot. For example, as the Wall Street Journal quoted one of the users initially testing it (emphasis mine):

The most promising use of AI in healthcare right now is automating “mundane” tasks like paperwork and physician note-taking, he said. The tendency for AI models to “hallucinate” and contain bias presents serious risks for using AI to replace doctors. Both Color’s Laraki and OpenAI’s Lightcap are adamant that doctors be involved in any clinical decisions.

I would probably not personally characterize “‘mundane’ tasks like paperwork and … note-taking” as “curing cancer”. Maybe an oncologist could use some code I developed too; even if it helped them, I wouldn’t be stealing valor from them on the curing-cancer part of their job.

Even fully giving it the benefit of the doubt that it works great, and improves patient outcomes significantly, this is medical back-office software. It is not super-intelligence.

It would not even matter if it were “super-intelligence”, whatever that means, because “intelligence” is not how you do medical care or medical research. It’s called “lab work” not “lab think”.

To put a fine point on it: biomedical research fundamentally cannot be done entirely by reading papers or processing existing information. It cannot even be done by testing drugs in computer simulations.

Biological systems are enormously complex, and medical research on new therapies inherently requires careful, repeated empirical testing to validate the correspondence of existing research with reality. Not “an experiment”, but a series of coordinated experiments that all test the same theoretical model. The data (which, in an LLM context, is “training data”) might just be wrong; it may not reflect reality, and the only way to tell is to continuously verify it against reality.

Previous observations can be tainted by methodological errors, by data fraud, and by operational mistakes by practitioners. If there were a way to do verifiable development of new disease therapies without the extremely expensive ladder going from cell cultures to animal models to human trials, we would already be doing it, and “AI” would just be an improvement to efficiency of that process. But there is no way to do that and nothing about the technologies involved in LLMs is going to change that fact.

Knowing Things

The practice of science — indeed any practice of the collection of meaningful information — must be done by intentionally and carefully selecting inclusion criteria, methodically and repeatedly curating our data, building a model that operates according to rules we understand and can verify, and verifying the data itself with repeated tests against nature. We cannot just hoover up whatever information happens to be conveniently available with no human intervention and hope it resolves to a correct model of reality by accident. We need to look where the keys are, not where the light is.

Piling up more and more information in a haphazard and increasingly precarious pile will not allow us to climb to the top of that pile, all the way to heaven, so that we can attack and dethrone God.

Eventually, we’ll just run out of disk space, and then lose the database file when the family gets a new computer anyway.

Acknowledgments

Thank you to my patrons who are supporting my writing on this blog. Special thanks also to Ben Chatterton for a brief pre-publication review; any errors remain my own. If you like what you’ve read here and you’d like to read more of it, or you’d like to support my various open-source endeavors, you can support my work as a sponsor! Special thanks also to Itamar Turner-Trauring and Thomas Grainger for pre-publication feedback on this article; any errors of course remain my own.

March 31, 2025

Ari Lamstein

censusdis v1.4.0 is now on PyPI

I recently contributed a new module to the censusdis package. This resulted in a new version of the package being pushed to PyPI. You can install it like this:

$ pip install censusdis -U #Verify that the installed version is 1.4.0 $ pip freeze | grep censusdis censusdis==1.4.0

The module I created is called multiyear. It is very similar to the utils module I created for my hometown_analysis project. This notebook demonstrates how to use the module. You can view the PR for the module here.

This PR caused me to grow as a Python programmer. Since many of my readers are looking to improve their technical skills, I thought to write down some of my lessons learned.

Python Files, Modules vs. Packages

The vocabulary around files, modules and packages in Python is confusing. This PR is when the terms finally clicked:

- A module is just a normal file with Python code (really). I am not sure why Python invented a new word for this. My best guess is to acknowledge that you can selectively import symbols from a module. This is different than C++ (the first language I programmed in professionally), where

#include <file>imports all of the target file. - A package is just a directory that contains Python modules. The best practice appears to be putting a file named

__init__.pyin the directory to denote that it’s a package. Although the file can be empty and is not strictly necessary (link). This also seems like an odd design decision.

One nice thing about this system is that it allows a package to span multiple (sub)directories. In R, all the code for a package must be in a single directory. I always felt that this limited the complexity of packages in R. It’s nice that Python doesn’t have that limitation.

Dependency Management

Python programmers like to talk about “dependency management hell.” This project gave me my first taste of that.

The initial version of the multiyear module used plotly to make the output of graph_multiyear interactive. I used it to do exploratory data analysis in Jupyter notebooks. However, when I tried to share those notebooks via github the images didn’t render: apparently Jupyter notebooks in github cannot render Javascript. The solution I stumbled upon is described here and requires the kaleido package.

The issue? Apparently this solution works with kaleido v0.2.0, but not the latest version of kaleido (link). So anyone who wants this functionality will need to install a specific version of kaleido. In Python this is known as “pinning” a dependency.

Technically, I believe you can do this by modifying the project’s pyproject.toml file by hand. But in practice people use tools like uv or poetry to both manage this file and create a “lockfile” which states the exact version of all packages you’re using. In this project I got experience doing this with both uv (which I used for my hometown_analysis repo) and poetry (which censusdis uses).

Linting

At my last job I advocated for having all the data scientists use a Style Guide. At that company we used R, and people were ok giving up some issues of personal taste in order to make collaboration easier. The process of enforcing adherence to a style guide (or running automated checks on code to detect errors) is called “linting”, and it’s a step we did not take.

In my hometown_analysis repo I regularly used black for this. It appears that black is the most widely used code formatter in the Python world. It was my first time using it on a project, and I simply ran it myself prior to checking in code.

The Censusdis repo takes this a step further:

- In addition to running black, it recommends contributors also run flake8 and ruff on their code prior to making a PR. For better or for worse, Python seems to have a lot of tools that do linting. There appears to be some overlap in what they all do, and I can’t speak to the unique differences between them. One thing that surprised me is that at least one of them was particular about the format in which I wrote documentation for my functions.

- It automatically runs all of these tools on each PR using Github Actions (link). If any of the linter tools detects an issue it causes the PR to fail the automated test suite.

Automated Tests

Speaking of tests: I did not feel the need to write them for my utils module for the hometown_analysis project. But censusdis uses pytest and has 99% test coverage (link). So it seemed appropriate to add tests to the multiyear module.

Writing tests is something that I’ve done occasionally throughout my career. Pytest was covered in Matt Harrison’s Professional Python course that I took last year, but I found that I forgot a lot of the material. So I did what most engineers would do: I looked at examples in the codebase and used an LLM to help me.

Type Annotations

I have mixed feelings about Python’s use of Type Annotations.

I began my software engineering career using C++, which is a statically typed language. Every variable in a C++ program must have a type defined at compile time (i.e. before the program executes). Python does not have this requirement, which I initially found freeing. Type annotations, I find, remove a lot of this freedom and also make the code a bit harder to read.

That being said, the censusdis package uses them throughout the codebase, so I added them to my module.

In Professional Python I was taught to run mypy to type check my type annotations. While I believe that my code passed without error, I noticed that the project had a few errors that were not covered in my course. For example:

cli/cli.py:9: error: Skipping analyzing "geopandas": module is installed, but missing library stubs or py.typed marker

It appears that type annotations become more complex when your code uses types defined by third-party libraries (such as Pandas and, in this case, GeoPandas). I researched these errors briefly and created a github issue for them.

Code Review

A major source of learning comes when someone more experienced than you reviews your code. This was one of the main reasons I chose to do this project: Darren (the maintainer of censusdis) is much more experienced than me at building Python packages, and I was interested in his feedback on my module.

Interestingly, his initial feedback was that it would be better if the graph_multiyear function used matplotlib instead of plotly. Not because matplotlib is better than plotly, but because other parts of censusdis already use matplotlib. And there’s value in a package having consistency in terms of which visualization package it uses. This made sense to me, although I do miss the interactive plots that plotly provided!

Conclusion

The book Software Engineering at Google defines software engineering as “programming integrated over time.” The idea is that when code is written for a small project, software engineering best practices aren’t that important. But when code is used over a long period of time, they become essential. This idea stayed with me throughout this project.

-

- The first time I did a multi-year analysis of ACS data was for my Covid Demographics Explorer, which I completed last June. I considered the project a one-off. I wrote a single script to download the data and an app to visualize it.

- For my hometown_analysis project I wanted to do exploratory data analysis of several variables over time. So I wrote a handful of functions to download and visualize multi-year ACS data. I put all the code in a single module and pinned the dependencies. I wrote docstrings for all the functions. I reasoned that if I ever want to do a similar analysis in the future then I could reuse the code.

- When I wanted to make it easier for others to use the code I added it to an existing package. That required being more rigorous about coding style, adding automated tests and type annotations. It also required me to make design decisions that are best for the overall package, even when they conflict with design decisions I made when working on the module independently.

My impression is that a lot of Python programmers (especially data scientists) have never contributed their code to an existing package. If you are given the opportunity, then I recommend giving it a shot. I found that it helped me grow as a Python programmer.

While I have disabled comments on my blog, I welcome hearing from reader. Use this form to contact me.

Real Python

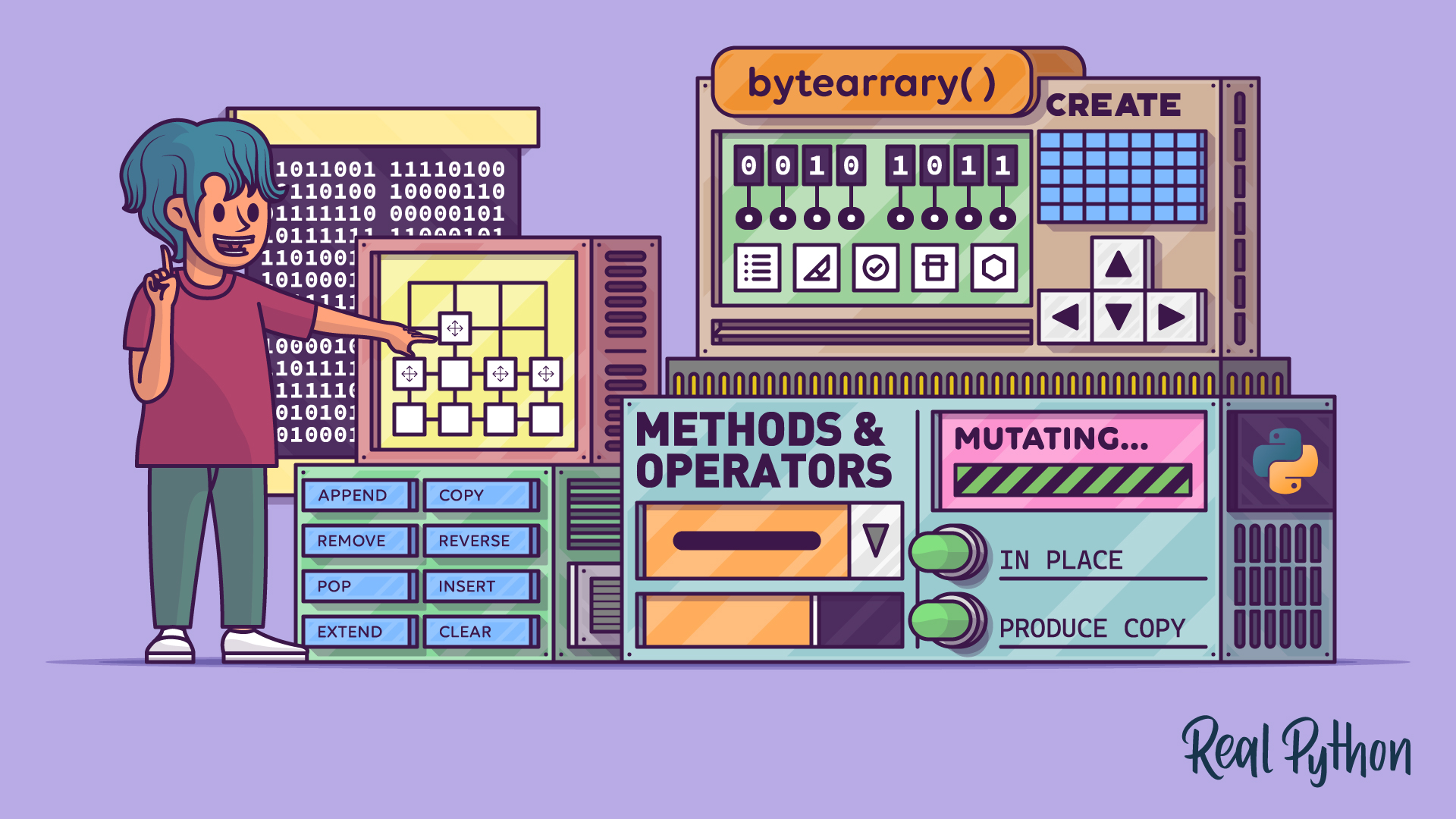

Python's Bytearray: A Mutable Sequence of Bytes

Python’s bytearray is a mutable sequence of bytes that allows you to manipulate binary data efficiently. Unlike immutable bytes, bytearray can be modified in place, making it suitable for tasks requiring frequent updates to byte sequences.

You can create a bytearray using the bytearray() constructor with various arguments or from a string of hexadecimal digits using .fromhex(). This tutorial explores creating, modifying, and using bytearray objects in Python.

By the end of this tutorial, you’ll understand that:

- A

bytearrayin Python is a mutable sequence of bytes that allows in-place modifications, unlike the immutablebytes. - You create a

bytearrayby using thebytearray()constructor with a non-negative integer, iterable of integers, bytes-like object, or a string with specified encoding. - You can modify a

bytearrayin Python by appending, slicing, or changing individual bytes, thanks to its mutable nature. - Common uses for

bytearrayinclude processing large binary files, working with network protocols, and tasks needing frequent updates to byte sequences.

You’ll dive deeper into each aspect of bytearray, exploring its creation, manipulation, and practical applications in Python programming.

Get Your Code: Click here to download the free sample code that you’ll use to learn about Python’s bytearray data type.

Take the Quiz: Test your knowledge with our interactive “Python's Bytearray” quiz. You’ll receive a score upon completion to help you track your learning progress:

Interactive Quiz

Python's BytearrayIn this quiz, you'll test your understanding of Python's bytearray data type. By working through this quiz, you'll revisit the key concepts and uses of bytearray in Python.

Understanding Python’s bytearray Type

Although Python remains a high-level programming language, it exposes a few specialized data types that let you manipulate binary data directly should you ever need to. These data types can be useful for tasks such as processing custom binary file formats, or working with low-level network protocols requiring precise control over the data.

The three closely related binary sequence types built into the language are:

bytesbytearraymemoryview

While they’re all Python sequences optimized for performance when dealing with binary data, they each have slightly different strengths and use cases.

Note: You’ll take a deep dive into Python’s bytearray in this tutorial. But, if you’d like to learn more about the companion bytes data type, then check out Bytes Objects: Handling Binary Data in Python, which also covers binary data fundamentals.

As both names suggest, bytes and bytearray are sequences of individual byte values, letting you process binary data at the byte level. For example, you may use them to work with plain text data, which typically represents characters as unique byte values, depending on the given character encoding.

Python natively interprets bytes as 8-bit unsigned integers, each representing one of 256 possible values (28) between 0 and 255. But sometimes, you may need to interpret the same bit pattern as a signed integer, for example, when handling digital audio samples that encode a sound wave’s amplitude levels. See the section on signedness in the Python bytes tutorial for more details.

The choice between bytes and bytearray boils down to whether you want read-only access to the underlying bytes or not. Instances of the bytes data type are immutable, meaning each one has a fixed value that you can’t change once the object is created. In contrast, bytearray objects are mutable sequences, allowing you to modify their contents after creation.

While it may seem counterintuitive at first—since many newcomers to Python expect objects to be directly modifiable—immutable objects have several benefits over their mutable counterparts. That’s why types like strings, tuples, and others require reassignment in Python.

The advantages of immutable data types include better memory efficiency due to the ability to cache or reuse objects without unnecessary copying. In Python, immutable objects are inherently hashable, so they can become dictionary keys or set elements. Additionally, relying on immutable objects gives you extra security, data integrity, and thread safety.

That said, if you need a binary sequence that allows for modification, then bytearray is the way to go. Use it when you frequently perform in-place byte operations that involve changing the contents of the sequence, such as appending, inserting, extending, or modifying individual bytes. A scenario where bytearray can be particularly useful includes processing large binary files in chunks or incrementally reading messages from a network buffer.

The third binary sequence type in Python mentioned earlier, memoryview, provides a zero-overhead view into the memory of certain objects. Unlike bytes and bytearray, whose mutability status is fixed, a memoryview can be either mutable or immutable depending on the target object it references. Just like bytes and bytearray, a memoryview may represent a series of single bytes, but at the same time, it can represent a sequence of multi-byte words.

Now that you have a basic understanding of Python’s binary sequence types and where bytearray fits into them, you can explore ways to create and work with bytearray objects in Python.

Creating bytearray Objects in Python

Unlike the immutable bytes data type, whose literal form resembles a string literal prefixed with the letter b—for example, b"GIF89a"—the mutable bytearray has no literal syntax in Python. This distinction is important despite many similarities between both byte-oriented sequences, which you’ll discover in the next section.

The primary way to create new bytearray instances is by explicitly calling the type’s class constructor, sometimes informally known as the bytearray() built-in function. Alternatively, you can create a bytearray from a string of hexadecimal digits. You’ll learn about both methods next.

The bytearray() Constructor

Read the full article at https://realpython.com/python-bytearray/ »

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

PyBites

Try an AI Speed Run For Your Next Side Project

The Problem

I have for as long as I can remember had a bit of a problem with analysis paralysis and tunnel vision.

If I’m working on a problem and get stuck, I have a tendency to just sit there paging through code trying to understand where to go next. It’s a very unproductive habit and one I’m committed to breaking, because the last thing you want is to lose hours of wall clock time with no progress on your work.

I was talking to my boss about this a few weeks back when I had a crazy idea: “Hey what if I wrote a program that looked for a particular key combo that I’d hit every time I make progress, and if a specified period e.g. 15 or 30 minutes go by with no progress, a loud buzzer gets played to remind me to ask for help, take a break, or just try something different.”

He thought this was a great idea, and suggested that this would be an ideal candidate to try as an “AI speed run”.

This article is a brief exploration of the process I used with some concrete hints on things that helped me make this project a success that you can use in your own coding “speed run” endeavors

Explain LIke The AI is 5

For purposes of this discussion I used ChatGPT with its GPT4.0 model. There’s nothing magical about that choice, you can use Claude or any other LLM that fits your needs.

Now comes the important part – coming up with the prompt! The first and most important part of building any program is coming to a full and detailed understanding of what you want to build.

Be as descriptive as you can, being sure to include all the most salient aspects of your project.

What does it do? Here’s where detail and specifics are super important. Where does it need to run? In a web browser? Windows? Mac? Linux? These are just examples of the kinds of detail you must include.

The initial prompt I came up with was: “Write a program that will run on Mac, Windows and Linux. The program should listen for a particular key combination, and if it doesn’t receive that combination within a prescribed (configurable) time, it plays a notification sound to the user.”.

Try, Try Again

Building software with a large language model isn’t like rubbing a magic lamp and making a wish, asking for your software to appear.

Instead, it’s more like having a conversation about what you want to build with an artist about something you want them to create for you.

The LLM is almost guaranteed to not produce exactly what you want on the first try. You can find the complete transcript of my conversation with ChatGPT for this project here.

Do take a moment to read through it a bit. Notice that on the first try it didn’t work at all, so I told it that and gave it the exact error. The fix it suggested wasn’t helping, so I did a tiny bit of very basic debugging and found that one of the modules it was suggested (the one for keyboard input) blew up as soon as I ran its import. So I told it that and suggested that the problem was with the other module that played the buzzer sound.

Progress Is A Change In Error Messages

Once we got past all the platform specific library shenanigans, there were structural issues with the code that needed to be addressed. When I ran the code it generated I got this:

UnboundLocalError: cannot access local variable 'watchdog_last_activity' where it is not associated with a valueSo I told it that by feeding the error back in. It then corrected course and generated the first fully working version of the program. Success!

And I don’t know about you, but a detail about this process that still amazes me? This whole conversation took less than an hour from idea to working program! That’s quite something.

Packaging And Polish

When Bob suggested that I should publish my project to the Python package repository I loved the idea, but I’d never done this before. Lately I’ve been using the amazing uv for all things package related. It’s an amazing tool!

So I dug into the documentation and started playing with my pyproject.toml. And if I’m honest? It wasn’t going very well. I kept trying to run uv publish and kept getting what seemed to me like inscrutable metadata errors

At moments like that I try to ask myself one simple question: “Am I following the happy path?” and in this case, the answer was no

When I started this project, I had used the uv init command to set up the project. I began to wonder whether I had set things up wrong, so I pored over the uv docs and one invocation of uv init --package later I had a buildable package that I could publish to pypi!

There was one bit of polish remaining before I felt like I could call this project “done” as a minimum viable product.

Buzzer, Buzzer, Who’s Got the Buzzer?

One of the things I’d struggled with since I first tried to package the program was where to put and how to bundle the sound file for the buzzer.

After trying various unsatisfying and sub-optimal things like asking the user to supply their own and using a command line argument to locate it, one of Bob’s early suggestions came to mind: I really needed to bundle the sound inside the package in such a way that the program could load it at run time.

LLM To The Res-Cue. Again!

One of the things you learn as you start working with large language models is that they act like a really good pair programming buddy. They offer another place to turn when you get stuck. So I asked ChatGPT:

Write a pyproject.toml for a Python package that includes code that loads a sound file from inside the package.

That did the trick! ChatGPT gave me the right pointers to include in my project toml file as well as the Python code to load the included sound file at run time!

Let AI Help You Boldly Go Where You’ve Never Been Before

As you can see from the final code, this program uses cross platform Python modules for sound playback and keyboard input and more importantly uses threads to manage the real time capture of keypresses while keeping track of the time.

I’ve been in this industry for over 30 years, and a recurring theme I’ve been hearing for most of that time is “Threads are hard”. And they are! But there are also cases like this where you can use them simply and reliably where they really make good sense! I know that now, and would feel comforable using them this way in a future project. There’s value in that! Any tool we can use to help us grow and improve our skills is one worth using, and if we take the time to understand the code AI generates for us it’s a good investment in my book!

Conclusions

I’m very grateful to my manager for having suggested that I try building this project as an “AI speed run”. It’s not something that would have occurred to me but in the end analysis it was a great experience from which I learned a lot.

Also? I’m super happy with the resulting tool and use it all the time now to ensure I don’t stay stuck and burn a ton of time making no progress!

You can see the project in its current state on my GitHub. There are lots of ideas I have for extending it in the future including a nice Textual interface and more polish around choosing the key chord and the “buzzer” sound.

Thanks for taking the time to read this. I hope that it inspires you to try your own AI speed run!

Talk Python to Me

#499: BeeWare and the State of Python on Mobile

This episode is all about Beeware, the project that working towards true native apps built on Python, especially for iOS and Android. Russell's been at this for more than a decade, and the progress is now hitting critical mass. We'll talk about the Toga GUI toolkit, building and shipping your apps with Briefcase, the newly official support for iOS and Android in CPython, and so much more. I can't wait to explore how BeeWare opens up the entire mobile ecosystem for Python developers, let's jump right in.<br/> <br/> <strong>Episode sponsors</strong><br/> <br/> <a href='https://talkpython.fm/workbench'>Posit</a><br> <a href='https://talkpython.fm/devopsbook'>Python in Production</a><br> <a href='https://talkpython.fm/training'>Talk Python Courses</a><br/> <br/> <h2 class="links-heading">Links from the show</h2> <div><strong>Anaconda open source team</strong>: <a href="https://www.anaconda.com/our-open-source-commitment?featured_on=talkpython" target="_blank" >anaconda.com</a><br/> <strong>PEP 730 – Adding iOS</strong>: <a href="https://peps.python.org/pep-0730/?featured_on=talkpython" target="_blank" >peps.python.org</a><br/> <strong>PEP 738 – Adding Android</strong>: <a href="https://peps.python.org/pep-0738/?featured_on=talkpython" target="_blank" >peps.python.org</a><br/> <strong>Toga</strong>: <a href="https://beeware.org/project/projects/libraries/toga/?featured_on=talkpython" target="_blank" >beeware.org</a><br/> <strong>Briefcase</strong>: <a href="https://beeware.org/project/projects/tools/briefcase/?featured_on=talkpython" target="_blank" >beeware.org</a><br/> <strong>emscripten</strong>: <a href="https://emscripten.org/?featured_on=talkpython" target="_blank" >emscripten.org</a><br/> <strong>Russell Keith-Magee - Keynote - PyCon 2019</strong>: <a href="https://www.youtube.com/watch?v=ftP5BQh1-YM&ab_channel=PyCon2019" target="_blank" >youtube.com</a><br/> <strong>Watch this episode on YouTube</strong>: <a href="https://www.youtube.com/watch?v=rSiq8iijkKg" target="_blank" >youtube.com</a><br/> <strong>Episode transcripts</strong>: <a href="https://talkpython.fm/episodes/transcript/499/beeware-and-the-state-of-python-on-mobile" target="_blank" >talkpython.fm</a><br/> <br/> <strong>--- Stay in touch with us ---</strong><br/> <strong>Subscribe to Talk Python on YouTube</strong>: <a href="https://talkpython.fm/youtube" target="_blank" >youtube.com</a><br/> <strong>Talk Python on Bluesky</strong>: <a href="https://bsky.app/profile/talkpython.fm" target="_blank" >@talkpython.fm at bsky.app</a><br/> <strong>Talk Python on Mastodon</strong>: <a href="https://fosstodon.org/web/@talkpython" target="_blank" ><i class="fa-brands fa-mastodon"></i>talkpython</a><br/> <strong>Michael on Bluesky</strong>: <a href="https://bsky.app/profile/mkennedy.codes?featured_on=talkpython" target="_blank" >@mkennedy.codes at bsky.app</a><br/> <strong>Michael on Mastodon</strong>: <a href="https://fosstodon.org/web/@mkennedy" target="_blank" ><i class="fa-brands fa-mastodon"></i>mkennedy</a><br/></div>

Python Bytes

#426 Committing to Formatted Markdown

<strong>Topics covered in this episode:</strong><br> <ul> <li><a href="https://github.com/hukkin/mdformat?featured_on=pythonbytes"><strong>mdformat</strong></a></li> <li><strong><a href="https://github.com/tox-dev/pre-commit-uv?featured_on=pythonbytes">pre-commit-uv</a></strong></li> <li><strong>PEP 758 and 781</strong></li> <li><strong><a href="https://github.com/lusingander/serie?featured_on=pythonbytes">Serie</a>: rich git commit graph in your terminal, like magic <img src="https://paper.dropboxstatic.com/static/img/ace/emoji/1f4da.png?version=8.0.0" alt="books" /></strong></li> <li><strong>Extras</strong></li> <li><strong>Joke</strong></li> </ul><a href='https://www.youtube.com/watch?v=-hHtfY8gW_0' style='font-weight: bold;'data-umami-event="Livestream-Past" data-umami-event-episode="426">Watch on YouTube</a><br> <p><strong>About the show</strong></p> <p>Sponsored by <strong>Posit Connect Cloud</strong>: <a href="https://pythonbytes.fm/connect-cloud">pythonbytes.fm/connect-cloud</a></p> <p><strong>Connect with the hosts</strong></p> <ul> <li>Michael: <a href="https://fosstodon.org/@mkennedy"><strong>@mkennedy@fosstodon.org</strong></a> <strong>/</strong> <a href="https://bsky.app/profile/mkennedy.codes?featured_on=pythonbytes"><strong>@mkennedy.codes</strong></a> <strong>(bsky)</strong></li> <li>Brian: <a href="https://fosstodon.org/@brianokken"><strong>@brianokken@fosstodon.org</strong></a> <strong>/</strong> <a href="https://bsky.app/profile/brianokken.bsky.social?featured_on=pythonbytes"><strong>@brianokken.bsky.social</strong></a></li> <li>Show: <a href="https://fosstodon.org/@pythonbytes"><strong>@pythonbytes@fosstodon.org</strong></a> <strong>/</strong> <a href="https://bsky.app/profile/pythonbytes.fm"><strong>@pythonbytes.fm</strong></a> <strong>(bsky)</strong></li> </ul> <p>Join us on YouTube at <a href="https://pythonbytes.fm/stream/live"><strong>pythonbytes.fm/live</strong></a> to be part of the audience. Usually <strong>Monday</strong> at 10am PT. Older video versions available there too.</p> <p>Finally, if you want an artisanal, hand-crafted digest of every week of the show notes in email form? Add your name and email to <a href="https://pythonbytes.fm/friends-of-the-show">our friends of the show list</a>, we'll never share it. </p> <p><strong>Brian #1:</strong> <a href="https://github.com/hukkin/mdformat?featured_on=pythonbytes"><strong>mdformat</strong></a></p> <ul> <li>Suggested by Matthias Schöttle</li> <li><a href="https://pythonbytes.fm/episodes/show/425/if-you-were-a-klingon-programmer">Last episode </a>Michael covered blacken-docs, and I mentioned it’d be nice to have an autoformatter for text markdown.</li> <li>Matthias delivered with suggesting mdformat</li> <li>“Mdformat is an opinionated Markdown formatter that can be used to enforce a consistent style in Markdown files.”</li> <li>A python project that can be run on the command line.</li> <li>Uses a <a href="https://mdformat.readthedocs.io/en/stable/users/style.html?featured_on=pythonbytes">style guide</a> I mostly agree with. <ul> <li>I’m not a huge fan of numbered list items all being “1.”, but that can be turned off with --number, so I’m happy.</li> <li>Converts underlined headings to #, ##, etc. headings.</li> <li>Lots of other sane conventions.</li> <li>The numbering thing is also sane, I just think it also makes the raw markdown hard to read.</li> </ul></li> <li>Has a <a href="https://mdformat.readthedocs.io/en/stable/users/plugins.html?featured_on=pythonbytes">plugin system to format code blocks</a></li> </ul> <p><strong>Michael #2:</strong> <a href="https://github.com/tox-dev/pre-commit-uv?featured_on=pythonbytes">pre-commit-uv</a></p> <ul> <li>via Ben Falk</li> <li>Use uv to create virtual environments and install packages for pre-commit.</li> </ul> <p><strong>Brian #3:</strong> <strong>PEP 758 and 781</strong></p> <ul> <li><a href="https://peps.python.org/pep-0758/?featured_on=pythonbytes">PEP 758 – Allow except and except* expressions without parentheses</a> <ul> <li>accepted</li> </ul></li> <li><a href="https://peps.python.org/pep-0781/?featured_on=pythonbytes">PEP 781 – Make TYPE_CHECKING a built-in constant</a> <ul> <li>draft status</li> </ul></li> <li>Also,<a href="https://peps.python.org/pep-0000/#index-by-category"> PEP Index by Category </a>kinda rocks</li> </ul> <p><strong>Michael #4:</strong> <a href="https://github.com/lusingander/serie?featured_on=pythonbytes">Serie</a>: rich git commit graph in your terminal, like magic <img src="https://paper.dropboxstatic.com/static/img/ace/emoji/1f4da.png?version=8.0.0" alt="books" /></p> <ul> <li>While some users prefer to use Git via CLI, they often rely on a GUI or feature-rich TUI to view commit logs. </li> <li>Others may find git log --graph sufficient.</li> <li><strong>Goals</strong> <ul> <li>Provide a rich git log --graph experience in the terminal.</li> <li>Offer commit graph-centric browsing of Git repositories.</li> </ul></li> </ul> <p><img src="https://github.com/lusingander/serie/raw/master/img/demo.gif" alt="" /></p> <p><strong>Extras</strong> </p> <p>Michael:</p> <ul> <li><a href="https://mkennedy.codes/posts/sunsetting-search/?featured_on=pythonbytes">Sunsetting Search</a>? (<a href="https://www.startpage.com/?featured_on=pythonbytes">Startpage</a>)</li> <li><a href="https://fosstodon.org/@RhetTbull/114237153385659674">Ruff in or out</a>?</li> </ul> <p><strong>Joke:</strong> <a href="https://x.com/PR0GRAMMERHUM0R/status/1902299037652447410?featured_on=pythonbytes">Wishing for wishes</a></p>

Armin Ronacher

I'm Leaving Sentry

Every ending marks a new beginning, and today, is the beginning of a new chapter for me. Ten years ago I took a leap into the unknown, today I take another. After a decade of working on Sentry I move on to start something new.

Sentry has been more than just a job, it has been a defining part of my life. A place where I've poured my energy, my ideas, my heart. It has shaped me, just as I've shaped it. And now, as I step away, I do so with immense gratitude, a deep sense of pride, and a heart full of memories.

From A Chance Encounter

I've known David, Sentry's co-founder (alongside Chris), long before I was ever officially part of the team as our paths first crossed on IRC in the Django community. Even my first commit to Sentry predates me officially working there by a few years. Back in 2013, over conversations in the middle of Russia — at a conference that, incidentally, also led to me meeting my wife — we toyed with the idea of starting a company together. That exact plan didn't materialize, but the seeds of collaboration had been planted.

Conversations continued, and by late 2014, the opportunity to help transform Sentry (which already showed product market fit) into a much bigger company was simply too good to pass up. I never could have imagined just how much that decision would shape the next decade of my life.

To A Decade of Experiences

For me, Sentry's growth has been nothing short of extraordinary. At first, I thought reaching 30 employees would be our ceiling. Then we surpassed that, and the milestones just kept coming — reaching a unicorn valuation was something I once thought was impossible. While we may have stumbled at times, we've also learned immensely throughout this time.

I'm grateful for all the things I got to experience and there never was a dull moment. From representing Sentry at conferences, opening an engineering office in Vienna, growing teams, helping employees, assisting our licensing efforts and leading our internal platform teams. Every step and achievement drove me.

Yet for me, the excitement and satisfaction of being so close to the founding of a company, yet not quite a founder, has only intensified my desire to see the rest of it.

A Hard Goodbye

Walking away from something you love is never easy and leaving Sentry is hard. Really hard. Sentry has been woven into the very fabric of my adult life. Working on it hasn't just spanned any random decade; it perfectly overlapped with marrying my wonderful wife, and growing our family from zero to three kids.

And will it go away entirely? The office is right around the corner afterall. From now on, every morning, when I will grab my coffee, I will walk past it. The idea of no longer being part of the daily decisions, the debates, the momentum — it feels surreal. That sense of belonging to a passionate team, wrestling with tough decisions, chasing big wins, fighting fires together, sometimes venting about our missteps and discussing absurd and ridiculous trivia became part of my identity.

There are so many bright individuals at Sentry, and I'm incredibly proud of what we have built together. Not just from an engineering point of view, but also product, marketing and upholding our core values. We developed SDKs that support a wide array of platforms from Python to JavaScript to Swift to C++, lately expanding to game consoles. We stayed true to our Open Source principles, even when other options were available. For example, when we needed an Open Source PDB implementation for analyzing Windows crashes but couldn't find a suitable solution, we contributed to a promising Rust crate instead of relying on Windows VMs and Microsoft's dbghelp. When we started, our ingestion system handled a few thousand requests per second — now it handles well over a million.

While building an SDK may seem straightforward, maintaining and updating them to remain best-in-class over the years requires immense dedication. It takes determination to build something that works out of the box with little configuration. A lot of clever engineering and a lot of deliberate tradeoffs went into the product to arrive where it is. And ten years later, is a multi-product company. What started with just crashes, now you can send traces, profiles, sessions, replays and more.

We also stuck to our values. I'm pleased that we ran experiments with licensing despite all the push back we got over the years. We might not have found the right solution yet, but we pushed the conversation. The same goes for our commitment to funding of dependencies.

And Heartfelt Thank You

I feel an enormous amount of gratitude for those last ten years. There are so many people I owe thanks to. I owe eternal thanks to David Cramer and Chris Jennings for the opportunity and trust they placed in me. To Ben Vinegar for his unwavering guidance and support. To Dan Levine, for investing in us and believing in our vision. To Daniel Griesser, for being an exceptional first hire in Vienna, and shepherding our office there and growing it to 50 people. To Vlad Cretu, for bringing structure to our chaos over the years. To Milin Desai for taking the helm and growing us.

And most of all, to my wonderful wife, Maria — who has stood beside me through every challenge, who has supported me when the road was uncertain, and who has always encouraged me to forge my own path.

To everyone at Sentry, past and present — thank you. For the trust, the lessons, the late nights, the victories. For making Sentry what it is today.

Quo eo?

I'm fully aware it's a gamble to believe my next venture will find the same success as Sentry. The reality is that startups that achieve the kind of scale and impact Sentry has are incredibly rare. There's a measure of hubris in assuming lightning strikes twice, and as humbling as that realization is, it also makes me that much more determined. The creative spark that fueled me at Sentry isn't dimming. Not at all in fact: it burns brighter fueld by the feeling that I can explore new things, beckoning me. There's more for me to explore, and I'm ready to channel all that energy into a new venture.

Today, I stand in an open field, my backpack filled with experiences and a renewed sense of purpose. That's because the world has changed a lot in the past decade, and so have I. What drives me now is different from what drove me before, and I want my work to reflect that evolution.

At my core, I'm still inspired by the same passion — seeing others find value in what I create, but my perspective has expanded. While I still take great joy in building things that help developers, I want to broaden my reach. I may not stray far from familiar territory, but I want to build something that speaks to more people, something that, hopefully, even my children will find meaningful.

Watch this space, as they say.

March 29, 2025

Ned Batchelder

Human sorting improved

When sorting strings, you’d often like the order to make sense to a person. That means numbers need to be treated numerically even if they are in a larger string.

For example, sorting Python versions with the default sort() would give you:

Python 3.10

Python 3.11

Python 3.9

when you want it to be:

Python 3.9

Python 3.10

Python 3.11

I wrote about this long ago (Human sorting), but have continued to tweak the code and needed to add it to a project recently. Here’s the latest:

import re

def human_key(s: str) -> tuple[list[str | int], str]:

"""Turn a string into a sortable value that works how humans expect.

"z23A" -> (["z", 23, "a"], "z23A")

The original string is appended as a last value to ensure the

key is unique enough so that "x1y" and "x001y" can be distinguished.

"""

def try_int(s: str) -> str | int:

"""If `s` is a number, return an int, else `s` unchanged."""

try:

return int(s)

except ValueError:

return s

return ([try_int(c) for c in re.split(r"(\d+)", s.casefold())], s)

def human_sort(strings: list[str]) -> None:

"""Sort a list of strings how humans expect."""

strings.sort(key=human_key)

The central idea here is to turn a string like "Python 3.9" into the

key ["Python ", 3, ".", 9] so that numeric components will be sorted by

their numeric value. The re.split() function gives us interleaved words and

numbers, and try_int() turns the numbers into actual numbers, giving us sortable

key lists.

There are two improvements from the original:

- The sort is made case-insensitive by using casefold() to lower-case the string.

- The key returned is now a two-element tuple: the first element is the list

of intermixed strings and integers that gives us the ordering we want. The

second element is the original string unchanged to ensure that unique strings

will always result in distinct keys. Without it,

"x1y"and"x001Y"would both produce the same key. This solves a problem that actually happened when sorting the items of a dictionary.# Without the tuple: different strings, same key!!

human_key("x1y") -> ["x", 1, "y"]

human_key("x001Y") -> ["x", 1, "y"]

# With the tuple: different strings, different keys.

human_key("x1y") -> (["x", 1, "y"], "x1y")

human_key("x001Y") -> (["x", 1, "y"], "x001Y")

If you are interested, there are many different ways to split the string into the word/number mix. The comments on the old post have many alternatives, and there are certainly more.

This still makes some assumptions about what is wanted, and doesn’t cover all possible options (floats? negative/positive? full file paths?). For those, you probably want the full-featured natsort (natural sort) package.

Python GUIs

PyQt6 Toolbars & Menus — QAction — Defining toolbars, menus, and keyboard shortcuts with QAction

Next, we'll look at some of the common user interface elements you've probably seen in many other applications — toolbars and menus. We'll also explore the neat system Qt provides for minimizing the duplication between different UI areas — QAction.

Basic App

We'll start this tutorial with a simple skeleton application, which we can customize. Save the following code in a file named app.py -- this code all the imports you'll need for the later steps:

from PyQt6.QtCore import QSize, Qt

from PyQt6.QtGui import QAction, QIcon, QKeySequence

from PyQt6.QtWidgets import (

QApplication,

QCheckBox,

QLabel,

QMainWindow,

QStatusBar,

QToolBar,

)

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

app = QApplication([])

window = MainWindow()

window.show()

app.exec()

This file contains the imports and the basic code that you'll use to complete the examples in this tutorial.

If you're migrating to PyQt6 from PyQt5, notice that QAction is now available via the QtGui module.

Toolbars

One of the most commonly seen user interface elements is the toolbar. Toolbars are bars of icons and/or text used to perform common tasks within an application, for which access via a menu would be cumbersome. They are one of the most common UI features seen in many applications. While some complex applications, particularly in the Microsoft Office suite, have migrated to contextual 'ribbon' interfaces, the standard toolbar is usually sufficient for the majority of applications you will create.

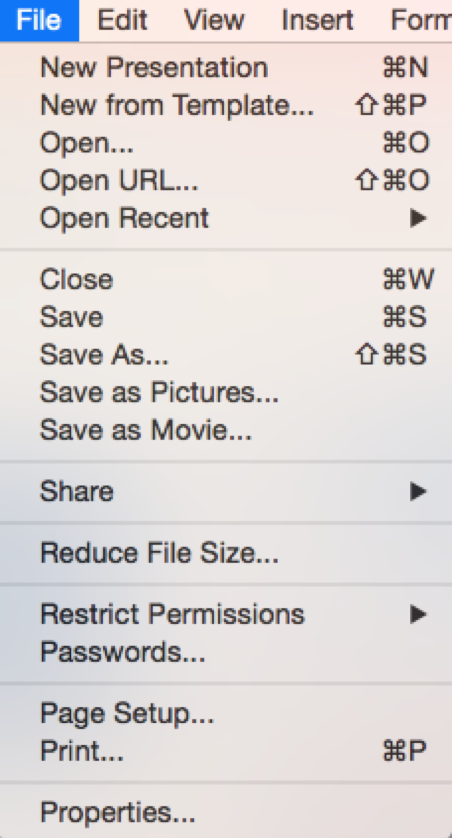

![]() Standard GUI elements

Standard GUI elements

Adding a Toolbar

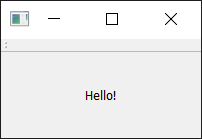

Let's start by adding a toolbar to our application.

In Qt, toolbars are created from the QToolBar class. To start, you create an instance of the class and then call addToolbar on the QMainWindow. Passing a string in as the first argument to QToolBar sets the toolbar's name, which will be used to identify the toolbar in the UI:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

self.addToolBar(toolbar)

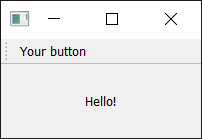

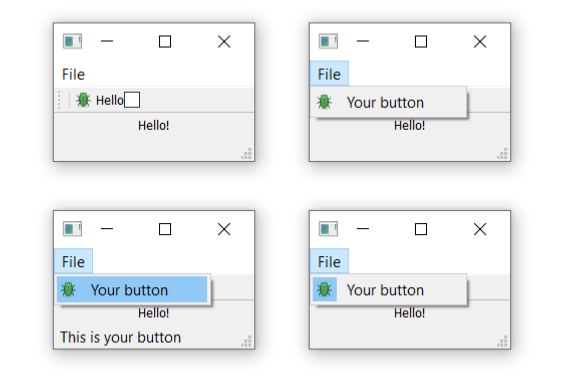

Run it! You'll see a thin grey bar at the top of the window. This is your toolbar. Right-click the name to trigger a context menu and toggle the bar off.

A window with a toolbar.

A window with a toolbar.

How can I get my toolbar back? Unfortunately, once you remove a toolbar, there is now no place to right-click to re-add it. So, as a general rule, you want to either keep one toolbar un-removeable, or provide an alternative interface in the menus to turn toolbars on and off.

We should make the toolbar a bit more interesting. We could just add a QButton widget, but there is a better approach in Qt that gets you some additional features — and that is via QAction. QAction is a class that provides a way to describe abstract user interfaces. What this means in English is that you can define multiple interface elements within a single object, unified by the effect that interacting with that element has.

For example, it is common to have functions that are represented in the toolbar but also the menu — think of something like Edit->Cut, which is present both in the Edit menu but also on the toolbar as a pair of scissors, and also through the keyboard shortcut Ctrl-X (Cmd-X on Mac).

Without QAction, you would have to define this in multiple places. But with QAction you can define a single QAction, defining the triggered action, and then add this action to both the menu and the toolbar. Each QAction has names, status messages, icons, and signals that you can connect to (and much more).

In the code below, you can see this first QAction added:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

self.addToolBar(toolbar)

button_action = QAction("Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

toolbar.addAction(button_action)

def toolbar_button_clicked(self, s):

print("click", s)

To start with, we create the function that will accept the signal from the QAction so we can see if it is working. Next, we define the QAction itself. When creating the instance, we can pass a label for the action and/or an icon. You must also pass in any QObject to act as the parent for the action — here we're passing self as a reference to our main window. Strangely, for QAction the parent element is passed in as the final argument.

Next, we can opt to set a status tip — this text will be displayed on the status bar once we have one. Finally, we connect the triggered signal to the custom function. This signal will fire whenever the QAction is triggered (or activated).

Run it! You should see your button with the label that you have defined. Click on it, and then our custom method will print "click" and the status of the button.

Toolbar showing our

Toolbar showing our QAction button.

Why is the signal always false? The signal passed indicates whether the button is checked, and since our button is not checkable — just clickable — it is always false. We'll show how to make it checkable shortly.

Next, we can add a status bar.

We create a status bar object by calling QStatusBar to get a new status bar object and then passing this into setStatusBar. Since we don't need to change the status bar settings, we can also just pass it in as we create it, in a single line:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

self.addToolBar(toolbar)

button_action = QAction("Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

toolbar.addAction(button_action)

self.setStatusBar(QStatusBar(self))

def toolbar_button_clicked(self, s):

print("click", s)

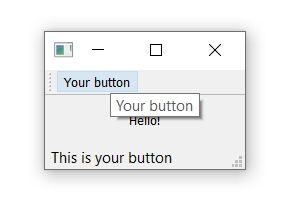

Run it! Hover your mouse over the toolbar button, and you will see the status text in the status bar.

Status bar text updated as we hover over the action.

Status bar text updated as we hover over the action.

Next, we're going to turn our QAction toggleable — so clicking will turn it on, and clicking again will turn it off. To do this, we simply call setCheckable(True) on the QAction object:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

self.addToolBar(toolbar)

button_action = QAction("Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

button_action.setCheckable(True)

toolbar.addAction(button_action)

self.setStatusBar(QStatusBar(self))

def toolbar_button_clicked(self, s):

print("click", s)

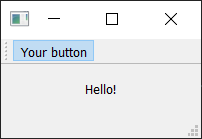

Run it! Click on the button to see it toggle from checked to unchecked state. Note that the custom slot method we create now alternates outputting True and False.

The toolbar button toggled on.

The toolbar button toggled on.

There is also a toggled signal, which only emits a signal when the button is toggled. But the effect is identical, so it is mostly pointless.

Things look pretty shabby right now — so let's add an icon to our button. For this, I recommend you download the fugue icon set by designer Yusuke Kamiyamane. It's a great set of beautiful 16x16 icons that can give your apps a nice professional look. It is freely available with only attribution required when you distribute your application — although I am sure the designer would appreciate some cash too if you have some spare.

![]() Fugue Icon Set — Yusuke Kamiyamane

Fugue Icon Set — Yusuke Kamiyamane

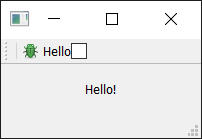

Select an image from the set (in the examples here, I've selected the file bug.png) and copy it into the same folder as your source code.

We can create a QIcon object by passing the file name to the class, e.g. QIcon("bug.png") -- if you place the file in another folder, you will need a full relative or absolute path to it.

Finally, to add the icon to the QAction (and therefore the button), we simply pass it in as the first argument when creating the QAction.

You also need to let the toolbar know how large your icons are. Otherwise, your icon will be surrounded by a lot of padding. You can do this by calling setIconSize() with a QSize object:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

toolbar.setIconSize(QSize(16, 16))

self.addToolBar(toolbar)

button_action = QAction(QIcon("bug.png"), "Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

button_action.setCheckable(True)

toolbar.addAction(button_action)

self.setStatusBar(QStatusBar(self))

def toolbar_button_clicked(self, s):

print("click", s)

Run it! The QAction is now represented by an icon. Everything should work exactly as it did before.

![]() Our action button with an icon.

Our action button with an icon.

Note that Qt uses your operating system's default settings to determine whether to show an icon, text, or an icon and text in the toolbar. But you can override this by using setToolButtonStyle(). This slot accepts any of the following flags from the Qt namespace:

| Flag | Behavior |

|---|---|

Qt.ToolButtonStyle.ToolButtonIconOnly |

Icon only, no text |

Qt.ToolButtonStyle.ToolButtonTextOnly |

Text only, no icon |

Qt.ToolButtonStyle.ToolButtonTextBesideIcon |

Icon and text, with text beside the icon |

Qt.ToolButtonStyle.ToolButtonTextUnderIcon |

Icon and text, with text under the icon |

Qt.ToolButtonStyle.ToolButtonFollowStyle |

Follow the host desktop style |

The default value is Qt.ToolButtonStyle.ToolButtonFollowStyle, meaning that your application will default to following the standard/global setting for the desktop on which the application runs. This is generally recommended to make your application feel as native as possible.

Finally, we can add a few more bits and bobs to the toolbar. We'll add a second button and a checkbox widget. As mentioned, you can literally put any widget in here, so feel free to go crazy:

from PyQt6.QtCore import QSize, Qt

from PyQt6.QtGui import QAction, QIcon

from PyQt6.QtWidgets import (

QApplication,

QCheckBox,

QLabel,

QMainWindow,

QStatusBar,

QToolBar,

)

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

toolbar.setIconSize(QSize(16, 16))

self.addToolBar(toolbar)

button_action = QAction(QIcon("bug.png"), "&Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

button_action.setCheckable(True)

toolbar.addAction(button_action)

toolbar.addSeparator()

button_action2 = QAction(QIcon("bug.png"), "Your &button2", self)

button_action2.setStatusTip("This is your button2")

button_action2.triggered.connect(self.toolbar_button_clicked)

button_action2.setCheckable(True)

toolbar.addAction(button_action2)

toolbar.addWidget(QLabel("Hello"))

toolbar.addWidget(QCheckBox())

self.setStatusBar(QStatusBar(self))

def toolbar_button_clicked(self, s):

print("click", s)

app = QApplication([])

window = MainWindow()

window.show()

app.exec()

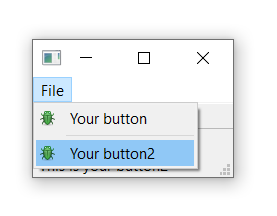

Run it! Now you see multiple buttons and a checkbox.

Toolbar with an action and two widgets.

Toolbar with an action and two widgets.

Menus

Menus are another standard component of UIs. Typically, they are at the top of the window or the top of a screen on macOS. They allow you to access all standard application functions. A few standard menus exist — for example File, Edit, Help. Menus can be nested to create hierarchical trees of functions, and they often support and display keyboard shortcuts for fast access to their functions.

Standard GUI elements - Menus

Standard GUI elements - Menus

Adding a Menu

To create a menu, we create a menubar we call menuBar() on the QMainWindow. We add a menu to our menu bar by calling addMenu(), passing in the name of the menu. I've called it '&File'. The ampersand defines a quick key to jump to this menu when pressing Alt.

This won't be visible on macOS. Note that this is different from a keyboard shortcut — we'll cover that shortly.

This is where the power of actions comes into play. We can reuse the already existing QAction to add the same function to the menu. To add an action, you call addAction() passing in one of our defined actions:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

toolbar.setIconSize(QSize(16, 16))

self.addToolBar(toolbar)

button_action = QAction(QIcon("bug.png"), "&Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

button_action.setCheckable(True)

toolbar.addAction(button_action)

toolbar.addSeparator()

button_action2 = QAction(QIcon("bug.png"), "Your &button2", self)

button_action2.setStatusTip("This is your button2")

button_action2.triggered.connect(self.toolbar_button_clicked)

button_action2.setCheckable(True)

toolbar.addAction(button_action2)

toolbar.addWidget(QLabel("Hello"))

toolbar.addWidget(QCheckBox())

self.setStatusBar(QStatusBar(self))

menu = self.menuBar()

file_menu = menu.addMenu("&File")

file_menu.addAction(button_action)

def toolbar_button_clicked(self, s):

print("click", s)

Run it! Click the item in the menu, and you will notice that it is toggleable — it inherits the features of the QAction.

Menu shown on the window -- on macOS this will be at the top of the screen.

Menu shown on the window -- on macOS this will be at the top of the screen.

Let's add some more things to the menu. Here, we'll add a separator to the menu, which will appear as a horizontal line in the menu, and then add the second QAction we created:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")

toolbar.setIconSize(QSize(16, 16))

self.addToolBar(toolbar)

button_action = QAction(QIcon("bug.png"), "&Your button", self)

button_action.setStatusTip("This is your button")

button_action.triggered.connect(self.toolbar_button_clicked)

button_action.setCheckable(True)

toolbar.addAction(button_action)

toolbar.addSeparator()

button_action2 = QAction(QIcon("bug.png"), "Your &button2", self)

button_action2.setStatusTip("This is your button2")

button_action2.triggered.connect(self.toolbar_button_clicked)

button_action2.setCheckable(True)

toolbar.addAction(button_action2)

toolbar.addWidget(QLabel("Hello"))

toolbar.addWidget(QCheckBox())

self.setStatusBar(QStatusBar(self))

menu = self.menuBar()

file_menu = menu.addMenu("&File")

file_menu.addAction(button_action)

file_menu.addSeparator()

file_menu.addAction(button_action2)

def toolbar_button_clicked(self, s):

print("click", s)

Run it! You should see two menu items with a line between them.

Our actions showing in the menu.

Our actions showing in the menu.

You can also use ampersand to add accelerator keys to the menu to allow a single key to be used to jump to a menu item when it is open. Again this doesn't work on macOS.

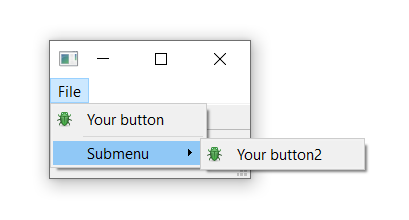

To add a submenu, you simply create a new menu by calling addMenu() on the parent menu. You can then add actions to it as usual. For example:

class MainWindow(QMainWindow):

def __init__(self):

super().__init__()

self.setWindowTitle("My App")

label = QLabel("Hello!")

label.setAlignment(Qt.AlignmentFlag.AlignCenter)

self.setCentralWidget(label)

toolbar = QToolBar("My main toolbar")